Optical Character Recognition (OCR) technology has come a long way from simple text extraction. Today's advanced OCR solutions can intelligently extract structured data from complex documents, which makes them valuable building blocks for automation workflows. In this post, we look at the OCR capabilities of Llamaindex's Extraction and Parse tools and walk through our n8n template, which uses them to process documents automatically.

Understanding Llamaindex Extraction and Parse

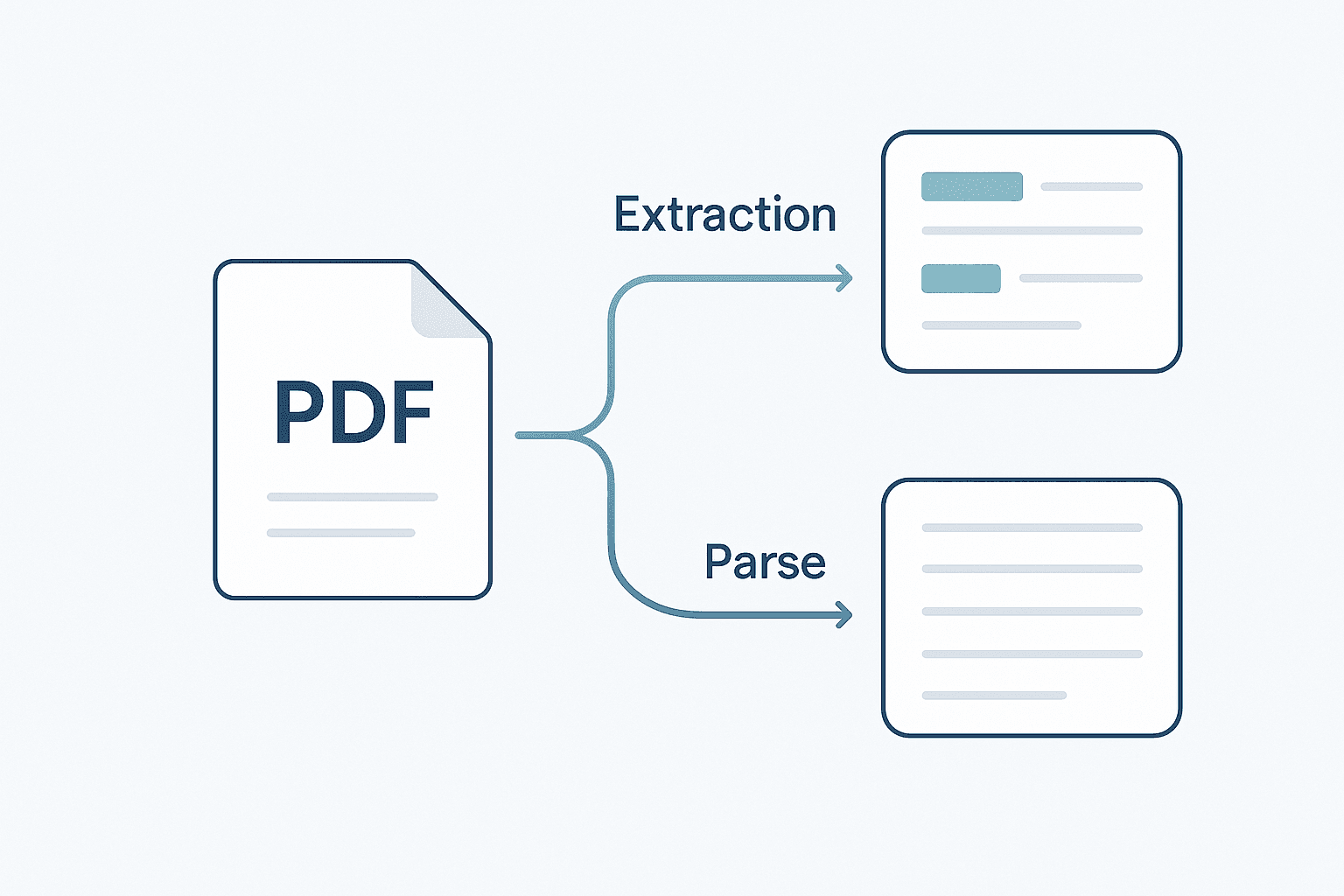

Llamaindex Cloud offers two powerful tools for document processing:

- Llamaindex Extraction: Allows you to define specific output formats and extract targeted information from documents. This is ideal when you need particular data points in a structured format.

- Llamaindex Parse: Extracts everything from a document (text, tables, images) and formats it for direct processing by Large Language Models (LLMs). This is perfect for comprehensive document analysis.

Both tools only work with PDF documents, so you'll need to convert other formats before processing.
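If your documents arrive as Word or PowerPoint files, one way to convert them is LibreOffice's headless mode. The sketch below assumes the `soffice` binary is installed and on your PATH; `to_pdf` and `pdf_path_for` are hypothetical helper names, not part of Llamaindex or n8n.

```shell
#!/bin/sh
# Hypothetical helpers for converting office documents to PDF before upload.
# Assumes LibreOffice's `soffice` binary is installed and on PATH.

# Print the path LibreOffice writes to: <outdir>/<basename without extension>.pdf
pdf_path_for() {
  src=$1
  outdir=$2
  base=$(basename "$src")
  printf '%s/%s.pdf\n' "$outdir" "${base%.*}"
}

# Convert SRC into OUTDIR (default: current directory) unless it is already a PDF,
# then print the path of the PDF to upload.
to_pdf() {
  src=$1
  outdir=${2:-.}
  case "$src" in
    *.pdf|*.PDF)
      printf '%s\n' "$src"
      return 0
      ;;
  esac
  soffice --headless --convert-to pdf --outdir "$outdir" "$src" >/dev/null || return 1
  pdf_path_for "$src" "$outdir"
}
```

For example, `to_pdf slides/deck.pptx /tmp/converted` would print `/tmp/converted/deck.pdf`, while a file that is already a PDF is passed through untouched.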

When to Use Each Tool

- Use Extraction: When you need specific pieces of information from documents (like company details from pitch decks, or question-answer pairs from forms)

- Use Parse: When you want to process entire documents for LLM analysis, create vector stores, or build comprehensive knowledge bases

Setting Up Llamaindex Extraction in n8n

Let's walk through how to implement Llamaindex Extraction in an n8n workflow to automatically extract structured data from pitch decks.

The Workflow Overview

The process follows these key steps:

- Get the extraction agent ID

- Prepare the PDF file

- Create a file object

- Run the extraction job

- Poll for job completion

- Retrieve and process the results

Step 1: Getting the Extraction Agent ID

First, you need to identify which extraction agent to use. In Llamaindex Cloud, you can create custom extraction agents with specific schemas for different document types.

```shell
curl -X 'GET' \
  "https://api.cloud.llamaindex.ai/api/v1/extraction/extraction-agents/by-name/$AGENT_NAME" \
  -H 'accept: application/json' \
  -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY"
```

This API call retrieves the agent ID by name, which you'll need for subsequent steps.
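Outside n8n, the same lookup can be wrapped in a small shell function that returns just the ID. This sketch assumes the response body is a JSON object with the agent's ID in a top-level `id` field and that `jq` is installed; `get_agent_id` and `auth_header` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: look up an extraction agent's ID by name.
# Assumes the API returns a JSON object with a top-level "id" field, and that jq is installed.

BASE_URL="https://api.cloud.llamaindex.ai/api/v1"

# Build the Authorization header, failing early if the API key is missing.
auth_header() {
  if [ -z "${LLAMA_CLOUD_API_KEY:-}" ]; then
    echo "LLAMA_CLOUD_API_KEY is not set" >&2
    return 1
  fi
  printf 'Authorization: Bearer %s\n' "$LLAMA_CLOUD_API_KEY"
}

# Print the ID of the agent named $1.
# Names containing spaces or other special characters would need URL-encoding first.
get_agent_id() {
  header=$(auth_header) || return 1
  response=$(curl -fsS "$BASE_URL/extraction/extraction-agents/by-name/$1" \
    -H 'accept: application/json' \
    -H "$header") || return 1
  printf '%s' "$response" | jq -r '.id'
}
```

Usage would look like `AGENT_ID=$(get_agent_id pitch-deck-extractor)`, where `pitch-deck-extractor` stands in for whatever name you gave your agent.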

Step 2: Preparing the PDF File

The extraction API works on the raw binary file. In n8n, fetch the PDF with an HTTP Request node and set its response format to File, so the node passes the document on as binary data rather than text.
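If you are scripting the workflow instead of using the HTTP Request node, curl can download the file, and a quick look at its first bytes confirms it really is a PDF (PDF files begin with the `%PDF-` header) before you spend credits on it. `download_pdf` and `is_pdf` are hypothetical helper names, and the URL in the usage line is a placeholder.

```shell
#!/bin/sh
# Hypothetical helpers for fetching a PDF and checking it before upload.

# Succeed only if file $1 exists and starts with the PDF header "%PDF-".
is_pdf() {
  [ -f "$1" ] && [ "$(head -c 5 "$1")" = "%PDF-" ]
}

# Download URL $1 to path $2, then refuse to continue if the result is not a PDF.
download_pdf() {
  curl -fsSL -o "$2" "$1" || return 1
  if ! is_pdf "$2"; then
    echo "$2 does not look like a PDF" >&2
    return 1
  fi
}
```

For example: `download_pdf 'https://example.com/deck.pdf' /tmp/deck.pdf`. The header check catches the common case where a link returns an HTML error or login page instead of the document.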

Step 3: Creating a File Object

Before processing, you need to create a file object in Llamaindex Cloud:

```shell
curl -X 'POST' \
  'https://api.cloud.llamaindex.ai/api/v1/files' \
  -H 'accept: application/json' \
  -H 'Content-Type: multipart/form-data' \
  -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY" \
  -F 'upload_file=@/path/to/your/file.pdf;type=application/pdf'
```

This creates a reference to your document that the extraction agent can work with.
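A scripted version of the upload can return just the new file's ID, ready for the extraction job. It assumes the response is a JSON object whose top-level `id` field holds the file ID, and it needs `jq`; `upload_file` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: upload a local PDF to Llamaindex Cloud and print its file ID.
# Assumes the response JSON carries the ID in a top-level "id" field, and that jq is installed.

BASE_URL="https://api.cloud.llamaindex.ai/api/v1"

upload_file() {
  path=$1
  # Fail fast with exit status 2 on a missing file, before any network call.
  if [ ! -f "$path" ]; then
    echo "no such file: $path" >&2
    return 2
  fi
  if [ -z "${LLAMA_CLOUD_API_KEY:-}" ]; then
    echo "LLAMA_CLOUD_API_KEY is not set" >&2
    return 1
  fi
  # curl sets the multipart boundary itself when -F is used.
  response=$(curl -fsS -X POST "$BASE_URL/files" \
    -H 'accept: application/json' \
    -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY" \
    -F "upload_file=@$path;type=application/pdf") || return 1
  printf '%s' "$response" | jq -r '.id'
}
```

Usage: `FILE_ID=$(upload_file /tmp/deck.pdf)`. The distinct exit status for a missing file makes it easy to tell a local mistake apart from an API failure.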

Step 4: Running the Extraction Job

Now you can run the actual extraction job:

```shell
curl -X 'POST' \
  'https://api.cloud.llamaindex.ai/api/v1/extraction/jobs' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY" \
  -d "{
    \"extraction_agent_id\": \"$AGENT_ID\",
    \"file_id\": \"$FILE_ID\"
  }"
```

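The payload combines the agent ID from Step 1 with the file ID from Step 3. You can also configure additional parameters, such as the extraction mode, or add a system prompt for more control. The response identifies the new job; keep its ID for the polling and result steps.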
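The payload is the part most often broken by quoting mistakes, so it can help to build it in one place. The sketch below uses `printf`, which is safe assuming the agent and file IDs are UUID-style strings with no quotes or backslashes; for arbitrary strings, build the JSON with `jq -n --arg` instead. It also assumes the job response carries the new job's ID in a top-level `id` field. `build_job_payload` and `start_extraction_job` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helpers for Step 4. Assumes agent and file IDs are UUID-style strings
# (no quotes or backslashes) and that the response JSON has a top-level "id" field.

BASE_URL="https://api.cloud.llamaindex.ai/api/v1"

# Print the JSON body for an extraction job: agent ID $1, file ID $2.
build_job_payload() {
  printf '{"extraction_agent_id":"%s","file_id":"%s"}\n' "$1" "$2"
}

# Start an extraction job and print its job ID (requires jq).
start_extraction_job() {
  payload=$(build_job_payload "$1" "$2")
  response=$(curl -fsS -X POST "$BASE_URL/extraction/jobs" \
    -H 'accept: application/json' \
    -H 'Content-Type: application/json' \
    -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY" \
    -d "$payload") || return 1
  printf '%s' "$response" | jq -r '.id'
}
```

Usage: `JOB_ID=$(start_extraction_job "$AGENT_ID" "$FILE_ID")`.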

Step 5: Polling for Job Completion

Extraction jobs run asynchronously, so you need to check when they're complete:

```shell
curl -X 'GET' \
  "https://api.cloud.llamaindex.ai/api/v1/extraction/jobs/$JOB_ID" \
  -H 'accept: application/json' \
  -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY"
```

In n8n, you can use a loop to check the status every 30 seconds until it's no longer "pending" or "in progress".
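The polling loop can be sketched in shell as well. This version assumes the job JSON reports its state in a top-level `status` field and needs `jq`. Following the rule above, it treats "pending" and "in progress" (in any case, with a space or an underscore) as still running and every other value as finished, so you still need to inspect the final status for success or failure. The 40-check cap is an arbitrary choice; `is_terminal_status` and `wait_for_job` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical polling helpers for Step 5. Assumes the job JSON has a top-level
# "status" field and that jq is installed.

BASE_URL="https://api.cloud.llamaindex.ai/api/v1"

# Succeed if status $1 means the job has stopped running.
# "pending" and "in progress" (any case, space or underscore) count as still running.
is_terminal_status() {
  normalized=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]' | tr ' ' '_')
  case "$normalized" in
    PENDING|IN_PROGRESS) return 1 ;;
    *) return 0 ;;
  esac
}

# Poll job $1 every $2 seconds (default 30), at most $3 times (default 40),
# and print the final status once the job stops running.
wait_for_job() {
  job_id=$1
  interval=${2:-30}
  max_checks=${3:-40}
  checks=0
  while [ "$checks" -lt "$max_checks" ]; do
    response=$(curl -fsS "$BASE_URL/extraction/jobs/$job_id" \
      -H 'accept: application/json' \
      -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY") || return 1
    job_status=$(printf '%s' "$response" | jq -r '.status')
    if is_terminal_status "$job_status"; then
      printf '%s\n' "$job_status"
      return 0
    fi
    checks=$((checks + 1))
    sleep "$interval"
  done
  echo "job $job_id still running after $max_checks checks" >&2
  return 1
}
```

Usage: `FINAL_STATUS=$(wait_for_job "$JOB_ID")`. Capping the number of checks keeps a stuck job from looping forever; the same guard is worth adding to the n8n loop.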

Step 6: Retrieving the Results

Once complete, you can fetch the structured data:

```shell
curl -X 'GET' \
  "https://api.cloud.llamaindex.ai/api/v1/extraction/jobs/$JOB_ID/result" \
  -H 'accept: application/json' \
  -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY"
```

This returns your data in the schema format you defined when creating the extraction agent.
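Once the job has finished, the result can be saved and trimmed down to the extracted fields. The sketch assumes the result JSON nests the schema-shaped data under a top-level `data` key (inspect one raw response to confirm before relying on it) and needs `jq`; `fetch_result` and `extract_data` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helpers for Step 6. Assumes the result JSON keeps the extracted,
# schema-shaped fields under a top-level "data" key, and that jq is installed.

BASE_URL="https://api.cloud.llamaindex.ai/api/v1"

# Read a result document on stdin and print only its "data" object.
extract_data() {
  jq '.data'
}

# Download the result for job $1 and write the extracted data to file $2.
fetch_result() {
  response=$(curl -fsS "$BASE_URL/extraction/jobs/$1/result" \
    -H 'accept: application/json' \
    -H "Authorization: Bearer $LLAMA_CLOUD_API_KEY") || return 1
  printf '%s' "$response" | extract_data > "$2"
}
```

Usage: `fetch_result "$JOB_ID" deck-data.json`.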

Creating Powerful Extraction Schemas

The real power of Llamaindex Extraction comes from its ability to extract data according to custom schemas. These schemas define exactly what information you want and how it should be structured.

For example, a pitch deck extraction schema might include:

- Company name and location

- Founder information (name, title, background)

- Technology details

- Market trends

- Financial projections

You can create these schemas manually or let Llamaindex generate them by providing example documents and an extraction prompt.

Schema Features

- Required fields: Mark fields as required to ensure they're always included

- Complex data structures: Support for arrays, nested objects, and various data types

- Field descriptions: Add context to help the AI understand what to extract
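As an illustration, a pitch-deck schema using all three features might look like the JSON Schema below, written to a file from the shell so it can be versioned alongside the workflow. The field names and descriptions are hypothetical choices for this example, not a schema shipped by Llamaindex, and the sketch assumes your agent accepts a standard JSON Schema object.

```shell
#!/bin/sh
# Hypothetical pitch-deck schema: a nested object (company), an array of objects
# (founders), required fields, and descriptions to guide the extraction.

write_pitch_deck_schema() {
  cat > "$1" <<'EOF'
{
  "type": "object",
  "properties": {
    "company": {
      "type": "object",
      "description": "Basic details about the company presenting the deck",
      "properties": {
        "name": { "type": "string", "description": "Company name as stated in the deck" },
        "location": { "type": "string", "description": "City and country of the headquarters" }
      },
      "required": ["name"]
    },
    "founders": {
      "type": "array",
      "description": "Every person introduced as a founder or co-founder",
      "items": {
        "type": "object",
        "properties": {
          "name": { "type": "string" },
          "title": { "type": "string", "description": "Role, for example CEO or CTO" },
          "background": { "type": "string", "description": "Prior experience or education mentioned in the deck" }
        },
        "required": ["name"]
      }
    },
    "technology": {
      "type": "string",
      "description": "Summary of the product's core technology"
    },
    "market_trends": {
      "type": "array",
      "description": "Market trends the deck cites to justify the opportunity",
      "items": { "type": "string" }
    },
    "financial_projections": {
      "type": "array",
      "description": "Projected figures by year, if the deck includes them",
      "items": {
        "type": "object",
        "properties": {
          "year": { "type": "integer" },
          "revenue": { "type": "number", "description": "Projected revenue in the deck's currency" }
        }
      }
    }
  },
  "required": ["company", "founders"]
}
EOF
}
```

Only `company` and `founders` are required here; everything else is optional because not every deck includes projections or market data.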

Business Applications

The structured data from Llamaindex Extraction opens up numerous possibilities:

- Database Population: Automatically extract information from documents and insert it into your CRM or other databases

- Investment Analysis: Process pitch decks to extract key metrics for investment decisions

- Form Processing: Extract question-answer pairs from forms or surveys

- Document Categorisation: Extract metadata to automatically categorise and route documents

- Compliance Checking: Extract specific fields to verify document compliance with regulations
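For database population, one low-effort route is to flatten the extracted JSON into CSV rows that most CRMs and databases can import. This sketch assumes the extracted data has a `founders` array whose items carry `name`, `title` and `background` fields (matching the founder information listed earlier) and needs `jq`; `founders_to_csv` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: turn extracted pitch-deck data into CSV rows for import.
# Assumes the input JSON has a "founders" array of objects with name, title and
# background fields, and that jq is installed. Missing fields become empty cells.

founders_to_csv() {
  printf 'name,title,background\n'
  jq -r '.founders[]? | [.name // "", .title // "", .background // ""] | @csv'
}
```

Usage: `founders_to_csv < deck-data.json > founders.csv`. jq's `@csv` filter handles quoting, so commas inside a founder's background do not split the row.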

Practical Example: Pitch Deck Analysis

In our demonstration, we extracted structured data from a pitch deck, including:

- Company details (name, location)

- Founder information (names, titles, backgrounds)

- Technology specifications

- Market trends

This structured output is perfect for:

- Storing in databases

- Feeding into other AI systems for further analysis

- Creating comparison reports

- Automating investment screening processes

Tips for Effective Implementation

- Start with clear schema definitions: The more precise your schema, the better your results

- Test with representative documents: Use real examples to refine your extraction agents

- Consider required vs. optional fields: Mark fields as optional if they might not appear in all documents

- Use the credit system wisely: The free tier includes 10,000 credits, so monitor usage to select the right plan

- Consider webhook integration: For production workflows, webhooks can be more efficient than polling

Conclusion

Llamaindex Extraction and Parse represent significant advancements in document processing technology. By combining these tools with n8n workflows, you can automate the extraction of structured data from documents, eliminating manual data entry and accelerating business processes.

Whether you're processing investment documents, analysing forms, or building knowledge bases, these tools provide a powerful foundation for document automation workflows that save time and improve accuracy.

The ability to transform unstructured documents into structured, machine-readable data opens up new possibilities for automation and analysis that were previously impractical or impossible with traditional OCR approaches.